8.5. Pgpool-II on Kubernetes

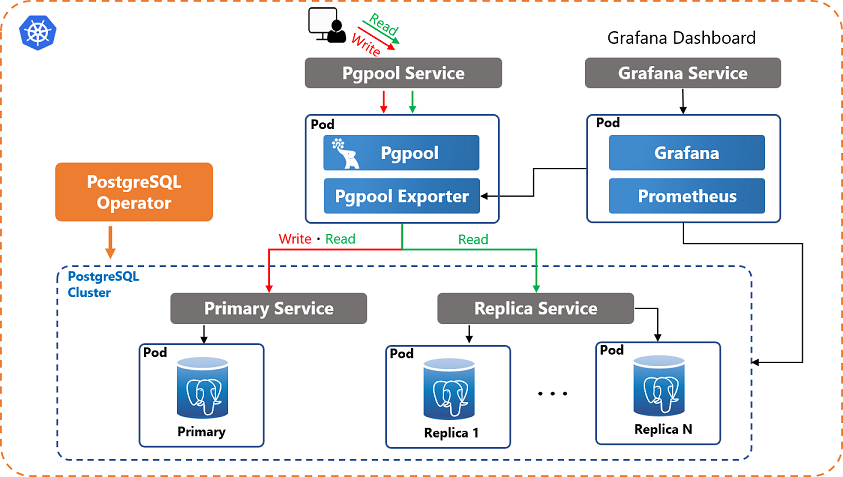

This section explains how to run Pgpool-II to achieve read query load balancing and connection pooling on Kubernetes.

8.5.1. Introduction

Because PostgreSQL is a stateful application and managing it has very specific requirements (e.g. backup, recovery and automatic failover), Kubernetes' built-in functionality can't handle these tasks. Therefore, an operator that extends Kubernetes to create and manage PostgreSQL is required.

There are several PostgreSQL operators, such as Crunchy PostgreSQL Operator, Zalando PostgreSQL Operator and KubeDB. However, these operators don't provide query load balancing functionality.

This section explains how to combine a PostgreSQL operator with Pgpool-II to deploy a PostgreSQL cluster with query load balancing and connection pooling on Kubernetes. Pgpool-II can be combined with any of the PostgreSQL operators mentioned above.

8.5.3. Prerequisites

Before you start the configuration process, please check the following prerequisites.

- A Kubernetes cluster is available and kubectl is installed.
- A PostgreSQL operator and a PostgreSQL cluster are installed.

8.5.4. Deploy Pgpool-II

Deploy a Pgpool-II pod that contains a Pgpool-II container and a Pgpool-II Exporter container.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pgpool
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pgpool
  template:
    metadata:
      labels:
        app: pgpool
    spec:
      containers:
      - name: pgpool
        image: pgpool/pgpool:4.2
        ...
      - name: pgpool-stats
        image: pgpool/pgpool2_exporter:1.0
        ...
```

Pgpool-II's health check, automatic failover, Watchdog and online recovery features aren't required on Kubernetes, because these tasks are handled by Kubernetes and the PostgreSQL operator. You only need to enable load balancing and connection pooling.

The Pgpool-II pod should work with the minimal configuration below:

```
backend_hostname0='primary service name'
backend_hostname1='replica service name'
backend_port0='5432'
backend_port1='5432'
backend_flag0='ALWAYS_PRIMARY|DISALLOW_TO_FAILOVER'
backend_flag1='DISALLOW_TO_FAILOVER'
sr_check_period = 10
sr_check_user='PostgreSQL user name'
load_balance_mode = on
connection_cache = on
listen_addresses = '*'
```

There are two ways to configure Pgpool-II:

- Using environment variables
- Using a ConfigMap

In a production environment, you may also need to configure client authentication and additional parameters. For production use, we recommend using a ConfigMap to manage Pgpool-II's configuration files, i.e. pgpool.conf, pcp.conf, pool_passwd and pool_hba.conf.

The following sections explain how to configure and deploy the Pgpool-II pod using environment variables and using a ConfigMap, respectively. You can download the manifest files used for deploying Pgpool-II from the pgpool/pgpool2_on_k8s repository on GitHub.

8.5.4.1. Configure Pgpool-II using environment variables

Kubernetes environment variables can be passed to a container in a pod. You can define environment variables in the deployment manifest to configure Pgpool-II's parameters. pgpool_deploy.yaml is an example of a Deployment manifest. You can download pgpool_deploy.yaml and specify environment variables in this manifest file.

$ curl -LO https://raw.githubusercontent.com/pgpool/pgpool2_on_k8s/master/pgpool_deploy.yaml

Environment variables whose names start with PGPOOL_PARAMS_ are converted to Pgpool-II's configuration parameters, and their values override the default configuration.
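As an illustration of this naming rule, assuming the PGPOOL_PARAMS_ prefix is stripped and the rest of the name is lower-cased to form the pgpool.conf parameter, the following `env` entries for the pgpool container would set num_init_children, max_pool and the backend weights. This is a sketch, not taken from pgpool_deploy.yaml: the values 32 and 4 mirror the ConfigMap example later in this section, and the weights are assumptions.

```yaml
# Illustrative env entries for the pgpool container (values are assumptions).
# Each PGPOOL_PARAMS_<NAME> variable is expected to become <name> in pgpool.conf.
# Kubernetes requires env values to be strings, hence the quotes.
env:
- name: PGPOOL_PARAMS_NUM_INIT_CHILDREN   # -> num_init_children = 32
  value: "32"
- name: PGPOOL_PARAMS_MAX_POOL            # -> max_pool = 4
  value: "4"
- name: PGPOOL_PARAMS_BACKEND_WEIGHT0     # -> backend_weight0 = 1 (primary)
  value: "1"
- name: PGPOOL_PARAMS_BACKEND_WEIGHT1     # -> backend_weight1 = 1 (replica)
  value: "1"
```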

The Pgpool-II container image is built to run in streaming replication mode. By default, load balancing, connection pooling and the streaming replication check are enabled.

Specify exactly two backend nodes: set backend_hostname0 to the primary Service name and backend_hostname1 to the replica Service name. Because failover is managed by Kubernetes, set the DISALLOW_TO_FAILOVER flag in backend_flag for both nodes, and additionally set the ALWAYS_PRIMARY flag in backend_flag0. Configure backend_weight as usual. You don't need to specify backend_data_directory.

For example, the following environment variables defined in the manifest:

```yaml
env:
- name: PGPOOL_PARAMS_BACKEND_HOSTNAME0
  value: "hippo"
- name: PGPOOL_PARAMS_BACKEND_HOSTNAME1
  value: "hippo-replica"
- name: PGPOOL_PARAMS_BACKEND_FLAG0
  value: "ALWAYS_PRIMARY|DISALLOW_TO_FAILOVER"
- name: PGPOOL_PARAMS_BACKEND_FLAG1
  value: "DISALLOW_TO_FAILOVER"
```

are converted to the following configuration parameters in pgpool.conf:

```
backend_hostname0='hippo'
backend_hostname1='hippo-replica'
backend_flag0='ALWAYS_PRIMARY|DISALLOW_TO_FAILOVER'
backend_flag1='DISALLOW_TO_FAILOVER'
```

Specify a PostgreSQL user name and password for the streaming replication check. For security reasons, we recommend specifying an encrypted password.

```yaml
- name: PGPOOL_PARAMS_SR_CHECK_USER
  value: "PostgreSQL user name"
- name: PGPOOL_PARAMS_SR_CHECK_PASSWORD
  value: "encrypted PostgreSQL user's password"
```

Alternatively, you can create a Secret and reference it from these environment variables.
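A minimal sketch of the Secret-based approach, assuming a Secret named `pgpool-sr-check` with the keys `username` and `password` (all three names are hypothetical). The first document is a complete Secret manifest; the second is only a fragment to merge into the pgpool container's spec in pgpool_deploy.yaml, using the standard Kubernetes `valueFrom.secretKeyRef` mechanism.

```yaml
# Hypothetical Secret holding the streaming replication check credentials.
apiVersion: v1
kind: Secret
metadata:
  name: pgpool-sr-check            # hypothetical name
type: Opaque
stringData:
  username: "postgres"             # example user; replace with your own
  password: "<encrypted password>" # placeholder; put the encrypted password here
---
# Fragment (not a standalone manifest): env section of the pgpool container,
# reading both values from the Secret above instead of hard-coding them.
env:
- name: PGPOOL_PARAMS_SR_CHECK_USER
  valueFrom:
    secretKeyRef:
      name: pgpool-sr-check
      key: username
- name: PGPOOL_PARAMS_SR_CHECK_PASSWORD
  valueFrom:
    secretKeyRef:
      name: pgpool-sr-check
      key: password
```

Keeping the credentials in a Secret means the Deployment manifest itself contains no password and can be shared or versioned more safely.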

Because health checking is performed by Kubernetes, Pgpool-II's own health check should be disabled. It is disabled by default, so you don't need to set any parameter for it.

By default, the following environment variables are set when the Pgpool-II container starts:

```shell
export PGPOOL_PARAMS_LISTEN_ADDRESSES=*
export PGPOOL_PARAMS_SR_CHECK_USER=${POSTGRES_USER:-"postgres"}
export PGPOOL_PARAMS_SOCKET_DIR=/var/run/postgresql
export PGPOOL_PARAMS_PCP_SOCKET_DIR=/var/run/postgresql
```

8.5.4.2. Configure Pgpool-II using ConfigMap

Alternatively, you can use a Kubernetes ConfigMap to store the entire configuration files, i.e. pgpool.conf, pcp.conf, pool_passwd and pool_hba.conf. The ConfigMap can be mounted into the Pgpool-II container as a volume.

You can download the example manifest files that define the ConfigMap and the Deployment from the repository:

```shell
curl -LO https://raw.githubusercontent.com/pgpool/pgpool2_on_k8s/master/pgpool_configmap.yaml
curl -LO https://raw.githubusercontent.com/pgpool/pgpool2_on_k8s/master/pgpool_deploy_with_mount_configmap.yaml
```

The manifest that defines the ConfigMap is in the following format. You can update it based on your configuration preferences.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: pgpool-config
  labels:
    app: pgpool-config
data:
  pgpool.conf: |-
    listen_addresses = '*'
    port = 9999
    socket_dir = '/var/run/postgresql'
    pcp_listen_addresses = '*'
    pcp_port = 9898
    pcp_socket_dir = '/var/run/postgresql'
    backend_hostname0 = 'hippo'
    backend_port0 = 5432
    backend_weight0 = 1
    backend_flag0 = 'ALWAYS_PRIMARY|DISALLOW_TO_FAILOVER'
    backend_hostname1 = 'hippo-replica'
    backend_port1 = 5432
    backend_weight1 = 1
    backend_flag1 = 'DISALLOW_TO_FAILOVER'
    sr_check_user = 'postgres'
    sr_check_period = 10
    enable_pool_hba = on
    master_slave_mode = on
    num_init_children = 32
    max_pool = 4
    child_life_time = 300
    child_max_connections = 0
    connection_life_time = 0
    client_idle_limit = 0
    connection_cache = on
    load_balance_mode = on
  pcp.conf: |-
    postgres:e8a48653851e28c69d0506508fb27fc5
  pool_passwd: |-
    postgres:md53175bce1d3201d16594cebf9d7eb3f9d
  pool_hba.conf: |-
    local all all trust
    host all all 127.0.0.1/32 trust
    host all all ::1/128 trust
    host all all 0.0.0.0/0 md5
```

First, create the ConfigMap; it must exist before the Pgpool-II pod can reference it.

kubectl apply -f pgpool_configmap.yaml

Once the ConfigMap has been created, you can deploy the Pgpool-II pod and mount the ConfigMap into it as a volume.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pgpool
...
        volumeMounts:
        - name: pgpool-config
          mountPath: /usr/local/pgpool-II/etc
...
      volumes:
      - name: pgpool-config
        configMap:
          name: pgpool-config
```
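The fragment above elides the surrounding fields. As a rough sketch of where `volumeMounts` and `volumes` sit, assuming a single pgpool container and omitting the exporter container and any other settings the downloadable manifest may contain, a self-contained Deployment could look like the following. The `containerPort` value is an assumption taken from `port = 9999` in the ConfigMap.

```yaml
# Sketch only: minimal Deployment mounting the pgpool-config ConfigMap.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pgpool
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pgpool
  template:
    metadata:
      labels:
        app: pgpool
    spec:
      containers:
      - name: pgpool
        image: pgpool/pgpool:4.2
        ports:
        - containerPort: 9999            # assumed; matches port = 9999 in pgpool.conf
        volumeMounts:
        - name: pgpool-config            # must match the volume name below
          mountPath: /usr/local/pgpool-II/etc
      volumes:                           # pod-level, sibling of containers
      - name: pgpool-config
        configMap:
          name: pgpool-config            # the ConfigMap created earlier
```

The two sections are linked by name: `volumeMounts` refers to the volume, and the volume refers to the ConfigMap, so each key in the ConfigMap's `data` appears as a file under the mount path.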

pgpool_deploy_with_mount_configmap.yaml is an example of a Deployment manifest that mounts the created ConfigMap to the Pgpool-II pod.

kubectl apply -f pgpool_deploy_with_mount_configmap.yaml

After deploying Pgpool-II, you can check the Pgpool-II pod and services using the kubectl get pod and kubectl get svc commands.
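If your manifests do not already define one, a Service in front of the pod gives clients a stable address. The sketch below is an assumption, not copied from the pgpool2_on_k8s manifests: it selects pods labeled `app: pgpool` and forwards port 9999, the port used in the ConfigMap example. The Service name `pgpool` and the port name are hypothetical.

```yaml
# Hypothetical ClusterIP Service exposing Pgpool-II inside the cluster.
apiVersion: v1
kind: Service
metadata:
  name: pgpool                 # clients would connect to host "pgpool", port 9999
spec:
  selector:
    app: pgpool                # matches the pod label in the Deployment
  ports:
  - name: pgpool-port
    protocol: TCP
    port: 9999                 # port exposed by the Service
    targetPort: 9999           # Pgpool-II's listening port in the container
```

Applications would then connect through this Service instead of connecting to the primary or replica Service directly, so that read queries are load balanced and connections are pooled by Pgpool-II.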